- Async/sync system

- Random access machine model

- Message passing model

- Shared memory model

Essential Issues of Alogrithm

- Model of computation

- Algorithm design

- Algorithm analysis

Asynchronous & Synchronous System

Asynchronous System. A system is said to be asynchronous if there is no fixed upper bound on how long it takes for a message to be delivered (message delays) or how much time elapses between consectutive steps of a processor (processor step time) [1].

Synchronous System. In the synchronous model processors execute in lockstep: The execution is partitioned into rounds, and in each round, every processor can send a message to each neighbour, the messages are delivered, and every processor computes based on the messages just received. (This model is convenient for designing algorithms) [1]

Why asynchronous systems?

- Sometimes the upper bounds are quite large, are infrequently reached and change over time.

- It is often desiable to design an algorithm that is

independent of any particular timing parameters, namely an asynchronous algorithm- Instead of design an algorithms that depends on the bounds

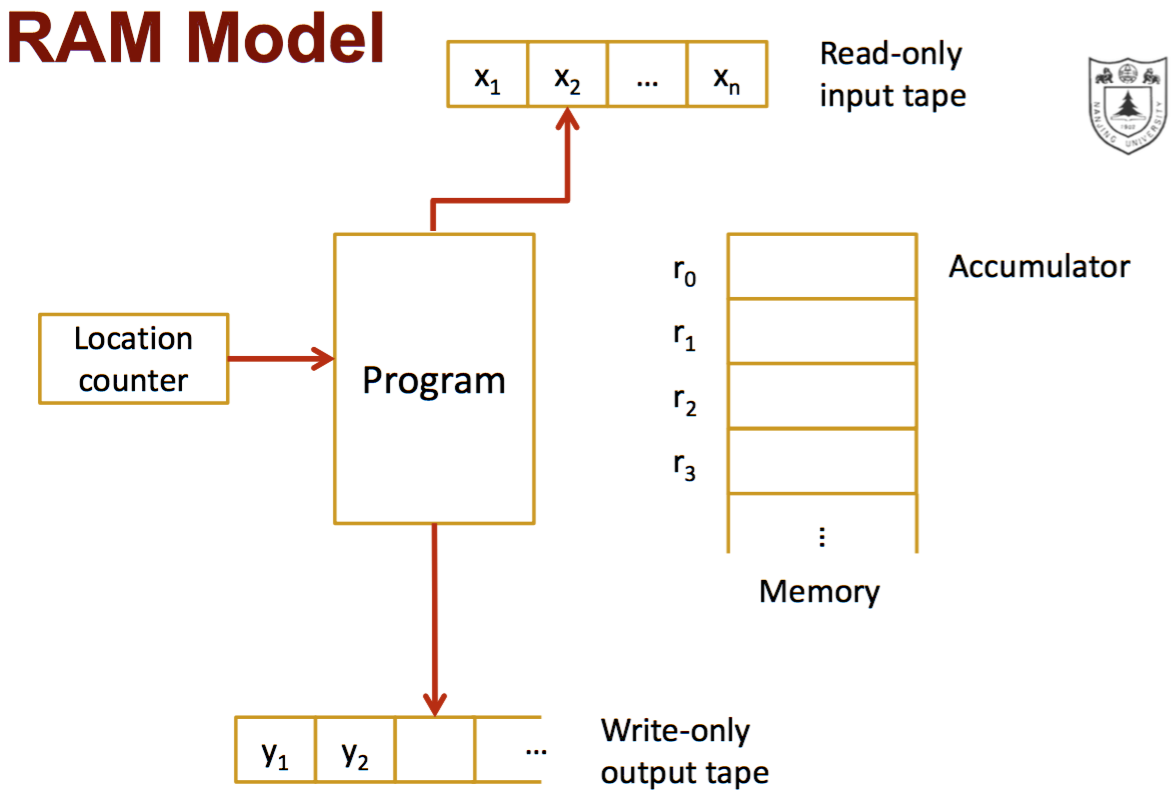

Random Access Machine (RAM) Model

The goal of working with a model computer instead of a real computer is that we want to have a machine, which is as easy as possible, but still let us capture the main aspects of a real computer.

This model of computation is an abstraction that allows us to compare algorithms on the basis of performance. Simplifications for RAM model:

- Simple operations take only 1 time step;

- Loops and subroutines are not simple operations;

- We assume we have as much memory as we need (infinite storage);

- Memory access is considered to be free in terms of time (or one time step?);

- A unit of memory cannot hold an arbitrarily large number.

The RAM model takes no notice of whether an item is in cache or on the disk, which simplifies the analysis. It is an excellent model for understanding how an algorithm will perform on a real computer. It strikes a fine balance by capturing the essential behavior of computers while being simple to work with. We use the RAM model because it is useful in practice.

Relationship between the Turing Machine and RAM Models

A random-access machine with unbounded indirection is equivalent to a Turing machine. Informally speaking, both machines have the same computational capabilities. (wikipedia | Equivalance of RAM and Turing Machines)

Message Passing Model

The architecture is used to communicate data among a set of processors without the need for a global memory. Each processor has its own local memory and communicates with other Processors using message.

Data exchanged among processors cannot be shared; it is rather copied (using send/receive messages). An important advantage of this form of data exchange is the elimination of the need for synchronization constructs, such as semaphores, which results in performance improvement.

Shared Memory Model

Both SMP and DSM are shared address space platforms.

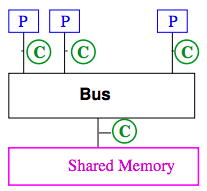

Symmetric Multiprocessors (SMP)

Processors all connected to a large shared memory. Examples are processors connected by crossbar, or multicore chips. It is symmetric because the access time from any of the CPUs to memory is the same.

Key characteristics is uniform memory acess (UMA).

Caches are a problem: need to be kept coherrent = when one CPU changes a value in memory, then all other CPUs will get the same value when they access it. All caches will show a coherent value.

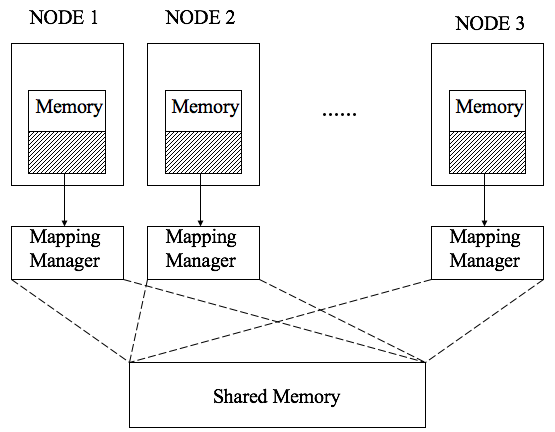

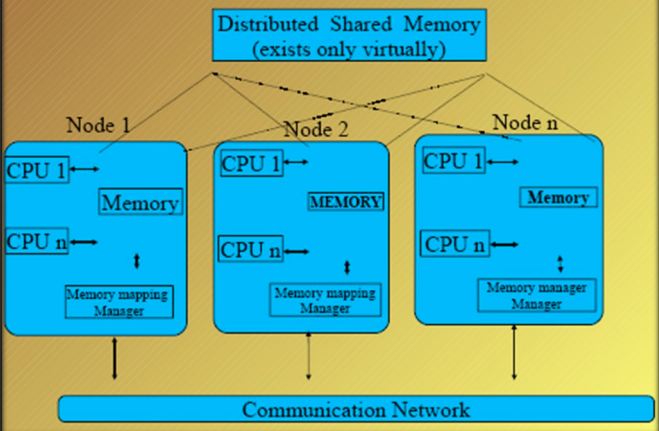

Distributed Shared Memory (DSM)

DSM is basically an abstraction that integrates the local memory of different machine into a single logical entity shared by cooperating processes.

- The distributed shared memory implements the shared memory model in distributed systems, which have no physical shared memory. (shared memory exists only virtually, similar concepts to virtual memory)

- The shared memory model provides a virtual address space shared between all nodes

- The overcome the high cost of communication in distributed systems, DSM systems move data to the location of access.

How?

- Data moves between main memory and secondary memory (within a node) and between main memories of different nodes.

- Each data object is owned by a node

- Initial owner is the node that created object

- Ownership can change as object moves from node to node

- When a process accesses data in the shared address space, the mapping manager maps shared memory address to physical memory (local or remote).

Shared Memory v.s. Message Passing

| Messsge Passing | Shared Memory | |

|---|---|---|

| who does communication | Programmer | Automatic |

| Data distribution | Manual | Automatic |

| HW support | Simple | Extensive (automatically figures out when to send data, to whom and where to cache in, etc.) |

| Programming | ||

| Correctness | Difficult | Less Difficult |

| Performance | Difficult (noce you get correctness, performance is not far away) | Very Difficult |

References

[1] Attiya, Hagit, and Jennifer Welch. Distributed computing: fundamentals, simulations, and advanced topics. Vol. 19. John Wiley & Sons, 2004.

[2] 分布式系统(Distributed System)资料

[3] Shared Memory, NYU Computer Science

[4] 分布式算法(黄宇)课程主页

[5] Message Passing Vs Shared Memory - Georgia Tech - HPCA: Part 5